By David Gerhardt, Ph.D.

Most of our day-to-day activities tolerate failure pretty well. That new recipe didn’t pan out, but take-out burritos can save the day (again). However, other scenarios can be less forgiving.

As a thought experiment, pretend you’re in an aluminum can on the surface of the moon in the late 1960s. This particular can has just one engine capable of getting you back into orbit to catch your ride back to Earth. Because you need to be as light as possible, you also must leave your landing legs behind (i.e., you don’t get to try again if it ‘kinda’ works). Worse still, it will take another 33 years for a poet to capture your predicament accurately: “You only get one shot, do not miss your chance to blow.”

As you shakily reach for the button, you think back to the Verification & Validation process that makes you fairly certain you’ll see your family again. How did the subsystem your life depends on go from initial fabrication to a trusted piece of hardware?

Going back to basics: Verification

Generally, trust is earned over time as things perform as expected. This is true not only for humans but also for the deliverables they create. Specifically, engineers assign trust incrementally as they confirm that a piece of hardware (a.k.a. a product) meets its previously-agreed responsibilities. We call this process Verification. The critical question Verification aims to answer is: “does this product do what we said it would?”

To answer this question, we have to look back at the statements that codify its responsibilities: the requirements.

Again we’ve arrived at a point where good requirements make all the difference in a project. Good requirements are verifiable: in other words, engineers can take specific actions to check if they are fulfilled. Not only that, good requirements call out one or more specific methods that are acceptable for Verification. You’re probably aware of the classical set of Verification methods: Inspection, Demonstration, Analysis, and Test. These are acceptable activities that prove a product is indeed doing what we said it would.
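To make the idea of a verifiable requirement concrete, here is a minimal sketch in Python. It assumes a simple in-house data model: the `Requirement` class, the `REQ-…` identifiers, and the requirement wording are all hypothetical and are not taken from any Apollo documentation. The only check it performs is whether each requirement calls out at least one of the four classical methods.

```python
from dataclasses import dataclass
from enum import Enum


class Method(Enum):
    """The four classical Verification methods."""
    INSPECTION = "Inspection"
    DEMONSTRATION = "Demonstration"
    ANALYSIS = "Analysis"
    TEST = "Test"


@dataclass(frozen=True)
class Requirement:
    """A requirement plus the methods accepted for verifying it (hypothetical model)."""
    req_id: str
    text: str
    methods: frozenset = frozenset()

    def is_verifiable(self) -> bool:
        # In this sketch, "verifiable" only means that at least one
        # acceptable Verification method has been called out.
        return len(self.methods) > 0


# Illustrative requirements only; IDs and wording are made up for this example.
requirements = [
    Requirement("REQ-001", "The engine shall ignite on command.",
                frozenset({Method.TEST, Method.ANALYSIS})),
    Requirement("REQ-002", "The subsystem shall be reliable."),
]

# Flag requirements that nobody could verify as written.
unverifiable = [r.req_id for r in requirements if not r.is_verifiable()]
print(unverifiable)
```

A real project would likely track far more than this (rationale, parent requirements, success criteria), but even a check this small catches requirements that have no agreed way to be verified.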

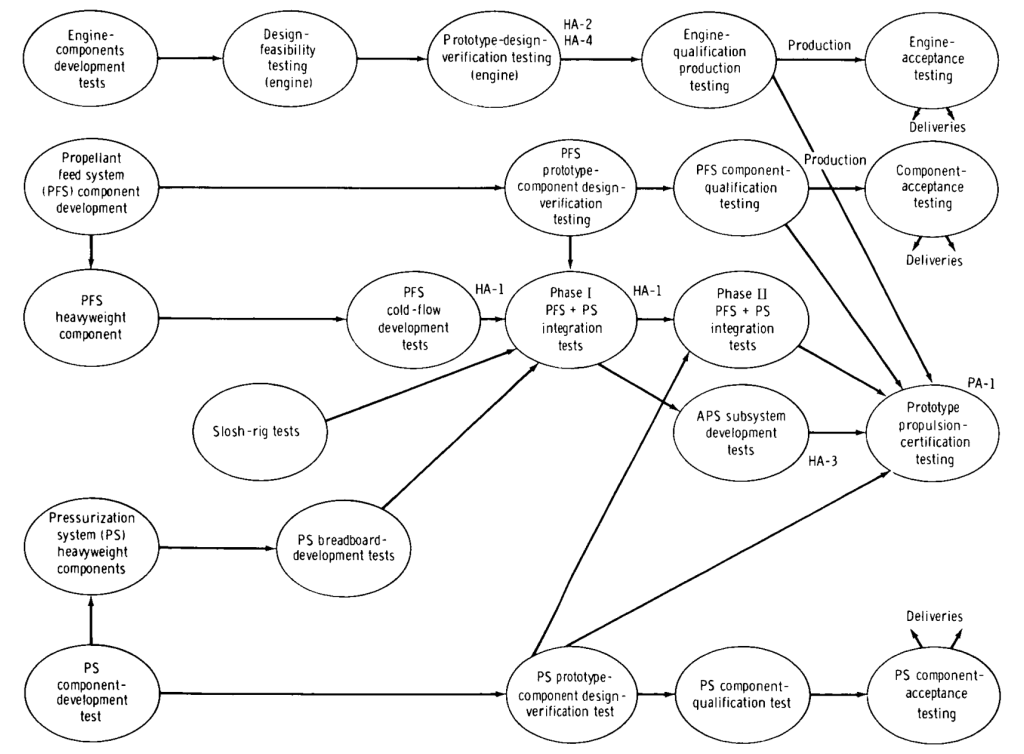

Verification does not happen all at once, nor is it limited to the period just before delivery. Engineers continually compare against requirements as pieces are manufactured, as those pieces glom into larger and larger assemblies, and as the entire system comes together. The figure below shows the major phases of the Verification process used for the Ascent Propulsion Subsystem (APS) responsible for getting Apollo astronauts off the surface of the moon.

This incremental Verification process takes significant planning, documentation, and time, but it minimizes surprises and usually makes for a more efficient project. Think of Verification like checking your map during a wilderness race – it means pausing for a moment, but you should do it as often as necessary to avoid wasting time by moving forward in the wrong direction. There is no universal standard for how often to check your ‘map’ – it depends on the length of the race and how difficult the terrain is. In other words, it’s up to the Systems Engineering team to tailor the Verification Plan to the unique needs of each project.

At any point in the Verification process, from the smallest component to the full system, engineers may observe a difference between what should have happened vs. what did happen. Identifying these discrepancies (including the full context for them) and raising a flag that they have occurred is critical to the Verification process.

Once a discrepancy has been identified, the engineering team studies it and decides how to respond. Possible responses are rejecting the discrepancy as invalid (due to an error in the Verification process), waiving the associated requirement(s), or initiating a product modification & re-verification.
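The three possible responses can be sketched as a small discrepancy log. This is an illustration under assumptions, not a description of any particular tool: the `Discrepancy` and `Disposition` names, the fields, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Disposition(Enum):
    """The three responses described above."""
    REJECT_AS_INVALID = "rejected: error in the Verification process"
    WAIVE_REQUIREMENT = "associated requirement waived"
    MODIFY_AND_REVERIFY = "product modified and re-verified"


@dataclass
class Discrepancy:
    """What should have happened vs. what did happen, with context (hypothetical model)."""
    req_id: str
    expected: str
    observed: str
    context: str
    disposition: Optional[Disposition] = None

    def is_open(self) -> bool:
        # A discrepancy stays open until the team explicitly decides how to respond.
        return self.disposition is None


def open_discrepancies(log):
    """Return every discrepancy that has been raised but not yet dispositioned."""
    return [d for d in log if d.is_open()]


# Made-up entries for illustration only.
log = [
    Discrepancy("REQ-001", "ignition on command", "no ignition",
                "component-level test, first attempt"),
    Discrepancy("REQ-007", "mass within limit", "reading above limit",
                "scale later found to be out of calibration",
                Disposition.REJECT_AS_INVALID),
]

print([d.req_id for d in open_discrepancies(log)])
```

Keeping the disposition empty until a decision is made means a raised flag cannot quietly disappear: it has to be rejected, waived, or fixed on the record.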

While it is easy to downplay discrepancies (“well, it almost met its requirement…”), or to blame Verification engineers for the problems uncovered, both are grave mistakes that miss the point of Verification. If hardware has not earned our trust, it is a mistake to assign it trust anyway in an effort to a) save face or b) maintain a schedule. It is not useful or helpful to ignore problems or to blame the engineers working to reveal these inherent problems.

Sound engineering requires the honesty and courage to raise a red flag, and respect for those who have done so. Nobody wants to slow down a project, but organizations must have the discipline to be thankful when problems are uncovered now rather than in operation. Finding them early leaves the most time and energy to fix them.

The customer is always right: Validation

While Verification compares a deliverable to its associated requirements, Validation demonstrates the deliverable and gauges the customer’s satisfaction. The critical question of Validation is: “did we build the right thing?”

‘Valid’ is sometimes an overloaded word, even among Systems Engineers. Early in a project, engineers talk of ‘valid’ requirements. These are the accepted requirements which a) meet criteria for what makes a ‘good’ requirement (e.g., concise, unambiguous, feasible, verifiable, etc.), and b) accurately capture the customer needs. Requirements which are ‘valid’ can, and should, be used in the product’s design.

The other, more frequent use of Validation (and the use referenced in the acronym ‘V&V’) occurs later in the project and is specifically referenced as Product Validation. This Validation effort requires an end product which has completed its Verification process. Ideally, Validation allows the customer and/or operator to interact with the product in the previously-defined environment or operational scenario.

An overly-simplified example of Validation is when the waiter lets you taste the first sip of wine for your approval. The wine has already been produced and Verified internally by the master winemaker. The same process (e.g., tasting a sample) is used for both Verification & Validation; the difference is who performs the process.

A more complex example is having an astronaut interact with the Ascent Propulsion Subsystem in a simulated environment (full Validation in the operational environment is not always possible). In this scenario, the astronaut runs through the APS procedure while engineers check to see if their product (which already performs as the requirements have dictated) performs as the astronaut expects it to. Would re-labeling buttons or adding safeguards help the astronaut effectively use the product?

Bringing it all together: V&V

Verification & Validation ends with a product that is ready for delivery to the customer. The product has met all its associated requirements, and the customer (or operator) is satisfied after interacting with it. V&V can be a long and laborious process, but it is necessary when hardware must be trusted for a critical job, like starting the long journey back home.

References

- https://www.nasa.gov/sites/default/files/atoms/files/nasa_systems_engineering_handbook_0.pdf

- https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19730010173.pdf
